Introduction

It’s been a while since my last post here, in which I wrote about a tool to enforce coding standards. Many things have changed since then, not only in my career but also in my personal life; I might address them in a future post. One of those changes is reflected here, in the language of this blog. From now on, it is time to switch to the most spoken language in the world, not only to encourage myself to write and think in English but also to encourage all you IT folks to read in English and give me feedback whenever possible.

Nowadays, the cloud is no longer just a gamble but a reality that brings many benefits. These range from quickly experimenting with new features while paying on demand, to scaling to serve millions of subscribers like Netflix, which runs over 100,000 live instances and, according to Sandvine, is responsible for 15% of global internet traffic.

Furthermore, although my previous job relied heavily on Cloud Foundry, which most likely runs on AWS, we didn’t have direct access to AWS services, not even to storage (S3) and databases (RDS), which we used extensively. Finally, job postings for more experienced developers and technical leaders often require knowledge of one of the major cloud platforms: Microsoft Azure, Google Cloud Platform (GCP), or AWS, the last being the most common.

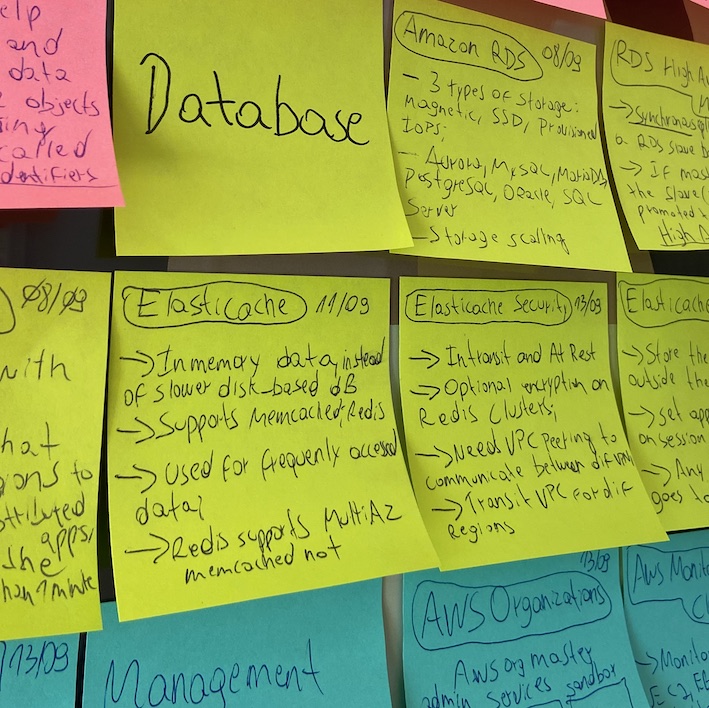

For these reasons, I decided to delve deeper and study for the AWS Solutions Architect Associate certification. In doing so, I sought not only to learn how to use key services but also to become capable of designing an application’s architecture around important pillars such as performance, security, resilience, and cost-effectiveness.

Takeaways

Here’s an overview of the services and features that caught my attention on this learning journey:

- Tags: Tags are key-value pairs that act as metadata for organizing your AWS resources. They help you manage, identify, organize, search for, and filter resources, and you can even define permissions based on them. Say you have a test environment where developers run thousands of tests, and each run uploads an output file to Amazon S3. How about creating a lifecycle rule to expire files tagged Environment=Test after 30 days? That’s possible!

- AWS Organizations: Enables you to consolidate multiple AWS accounts into an organization that you create and centrally manage.

- S3 Lifecycle: You can add rules to an S3 Lifecycle configuration that tell Amazon S3 to transition objects to another storage class. For example, you can add a rule that moves objects to S3 Glacier 30 days after their creation to save money.

- S3 Object Lock: Lets you store objects using a write-once-read-many (WORM) model. Object Lock can help prevent objects from being deleted or overwritten for a fixed amount of time or indefinitely. Say your application has a bug and tries to delete files it is not supposed to touch: Object Lock could be an important safeguard for those files.

- S3 Event Notifications: You can use this feature to receive notifications when certain events happen in your S3 bucket, such as object creation, object removal, and so on.

- Amazon Athena: A query service that makes it easy to analyze data directly in Amazon S3 using standard SQL.

- S3 File Gateway: Provides your applications with a file interface to seamlessly store files as objects in Amazon S3 and access them using industry-standard file protocols. It is useful for migrating on-premises file data to Amazon S3 while maintaining fast local access to recently accessed data.

- DynamoDB Streams: Captures a time-ordered sequence of item-level modifications in any DynamoDB table and stores this information in a log for up to 24 hours. Applications can access this log and view the data items as they appeared before and after they were modified, in near-real time.

- Amazon Aurora: Relational database built for the cloud with full MySQL and PostgreSQL compatibility. You can also use Aurora Serverless, which enables you to run your database in the cloud without worrying about database capacity management.

- Amazon SQS: Fully managed message queuing service that scales almost infinitely and provides simple, easy-to-use APIs. You can also interact with Amazon SQS using the industry-standard Java Message Service (JMS) API, which lets you use Amazon SQS as the JMS provider with minimal code changes.

- Amazon MQ: Managed message broker service for Apache ActiveMQ and RabbitMQ that makes it easy to set up and operate message brokers in the cloud. Amazon MQ suits enterprise IT pros, developers, and architects who manage a message broker themselves, whether on-premises or in the cloud, and want to move to a fully managed cloud service without rewriting the messaging code in their applications.

- AWS Lambda: Event-driven, serverless compute service that lets developers run code without provisioning or managing servers. It executes code in response to events and automatically manages the compute resources that code requires.

- Amazon API Gateway: Fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. APIs act as the “front door” for applications to access data, business logic, or functionality from your backend services. With API Gateway, you can create RESTful APIs as well as WebSocket APIs for real-time, two-way communication. API Gateway supports containerized and serverless workloads, as well as web applications.

- Amazon Cognito: Identity platform for web and mobile apps. It’s a user directory, an authentication server, and an authorization service for OAuth 2.0 access tokens and AWS credentials. With Amazon Cognito, you can authenticate and authorize users from the built-in user directory, from your enterprise directory, or from consumer identity providers such as Google and Facebook.

- Machine Learning: Amazon offers impressive services that do things only humans could do until recently. Polly turns text into lifelike speech in many languages. Rekognition lets you easily build applications that search, verify, and organize images and detect objects, scenes, faces, and more. Transcribe adds speech-to-text capability to your applications with automatic speech recognition. These are just a few of the dozens of machine learning services AWS provides.

- AWS X-Ray: Helps developers analyze and debug distributed production applications, such as those built using a microservices architecture. With X-Ray, you can understand how your application and its underlying services are performing, and identify and troubleshoot the root cause of performance issues and errors.

- Amazon EventBridge: Serverless event bus for building event-driven apps at scale. It provides real-time access to changes in data from AWS services, your own applications, and software-as-a-service (SaaS) applications without writing code. EventBridge also automatically ingests events from over 200 AWS services without requiring developers to create any resources in their accounts.

- AWS Database Migration Service (DMS): Cloud service that makes it possible to migrate relational databases, data warehouses, NoSQL databases, and other types of data stores. To migrate to a different database engine, you can use DMS Schema Conversion, which automatically assesses and converts your source schemas to the new target engine.

- AWS DataSync: Enables you to transfer datasets to and from on-premises storage, other cloud providers, and AWS storage services. DataSync can connect to existing storage systems and data sources using standard storage protocols (NFS, SMB), as an HDFS client, through the Amazon S3 API, or through other cloud storage APIs. It’s well suited for migrating data, archiving cold data, and replicating data.

- AWS Backup: Service that centralizes and automates data protection across AWS services such as S3, EC2, and RDS. It includes dashboards to track backup activity, such as backup, copy, and restore jobs. It also provides a centralized console, automated backup scheduling, backup retention management, and backup monitoring and alerting.

- Amazon Kinesis: Family of services for processing and analyzing real-time streaming data at large scale. It can collect terabytes of data per day from application and service logs, clickstream data, sensor data, and in-app user events to power live dashboards, generate metrics, and deliver data into data lakes.
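As a minimal sketch of the Tags and S3 Lifecycle items above, the following Python snippet builds a lifecycle configuration with two rules: one expires objects tagged Environment=Test 30 days after creation, and the other transitions objects under a hypothetical archive/ prefix to S3 Glacier after 30 days. The rule IDs, prefix, and bucket name are illustrative assumptions. The dictionary follows the request shape of boto3's put_bucket_lifecycle_configuration; the call itself is left commented out so the snippet runs without AWS credentials.

```python
import json


def build_lifecycle_configuration(days: int = 30) -> dict:
    """Build an S3 lifecycle configuration in the shape boto3 expects.

    Rule IDs and the archive/ prefix are hypothetical examples.
    """
    return {
        "Rules": [
            {
                # Expire test output files, identified by the Environment=Test tag.
                "ID": "expire-test-output",
                "Filter": {"Tag": {"Key": "Environment", "Value": "Test"}},
                "Status": "Enabled",
                "Expiration": {"Days": days},
            },
            {
                # Move objects under the (hypothetical) archive/ prefix to Glacier.
                "ID": "archive-to-glacier",
                "Filter": {"Prefix": "archive/"},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            },
        ]
    }


config = build_lifecycle_configuration()
print(json.dumps(config, indent=2))

# With boto3 installed and credentials configured, you could apply it with:
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="my-test-bucket",  # hypothetical bucket name
#     LifecycleConfiguration=config,
# )
```

Keeping the rules in plain data like this also makes them easy to review and version alongside the rest of the infrastructure code.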
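S3 Event Notifications and AWS Lambda fit together naturally: a bucket can be configured to invoke a function whenever an object is created or removed. The sketch below assumes that setup and a Python Lambda runtime. The bucket and key in the sample event are hypothetical, and the sample event is trimmed down to the few fields the handler reads rather than a full S3 payload.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Summarize S3 event notification records delivered to a Lambda function."""
    processed = []
    for record in event.get("Records", []):
        event_name = record["eventName"]  # e.g. "ObjectCreated:Put"
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become "+"), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        if event_name.startswith("ObjectCreated:"):
            processed.append(f"created s3://{bucket}/{key}")
        elif event_name.startswith("ObjectRemoved:"):
            processed.append(f"removed s3://{bucket}/{key}")
    return {"processed": processed}


# Local usage with a hand-written, trimmed-down sample event.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "test-results"},
                "object": {"key": "run+42/report.txt"},
            },
        }
    ]
}
print(lambda_handler(sample_event, None))
```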
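Similarly, a Lambda function can consume a DynamoDB stream. Assuming the stream uses the NEW_AND_OLD_IMAGES view type, each MODIFY record carries the item as it looked before (OldImage) and after (NewImage) the change, in DynamoDB's typed JSON format such as {"S": "..."} for strings. This sketch reports which attributes changed; the OrderId and Status attributes in the sample event are made up for illustration.

```python
def changed_attributes(record: dict) -> dict:
    """Return {attribute: (old, new)} for attributes that differ in a stream record."""
    images = record.get("dynamodb", {})
    old = images.get("OldImage", {})
    new = images.get("NewImage", {})
    changes = {}
    for name in sorted(set(old) | set(new)):
        if old.get(name) != new.get(name):
            changes[name] = (old.get(name), new.get(name))
    return changes


def lambda_handler(event, context):
    """Collect before/after values for every modified item in the batch."""
    results = []
    for record in event.get("Records", []):
        if record.get("eventName") == "MODIFY":
            results.append(changed_attributes(record))
    return results


# Hand-written sample event with a single MODIFY record (hypothetical table data).
sample_event = {
    "Records": [
        {
            "eventName": "MODIFY",
            "dynamodb": {
                "Keys": {"OrderId": {"S": "42"}},
                "OldImage": {"OrderId": {"S": "42"}, "Status": {"S": "PENDING"}},
                "NewImage": {"OrderId": {"S": "42"}, "Status": {"S": "SHIPPED"}},
            },
        }
    ]
}
print(lambda_handler(sample_event, None))
```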

Conclusion

It’s worth mentioning the impressive potential of AWS, which goes beyond platform as a service (PaaS) to include software as a service (SaaS) capabilities. EventBridge is one of those great services: as the product evolves and new features emerge, you can seamlessly plug in new services, not just applications, to handle events, all without programming! That said, the biggest concern with using all these services may be lock-in with AWS. Since achieving full independence from a cloud provider can be challenging, a better option is to design a modular solution with concise interfaces and layers (high cohesion, low coupling), which makes a potential migration easier.
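One way to apply that idea is the ports-and-adapters style sketched below in Python: the business logic depends only on a small storage interface, and AWS specifics live in an adapter that could be swapped during a migration. BlobStore, ReportService, and both adapters are hypothetical names for illustration. S3BlobStore uses boto3's put_object and get_object and expects an already-created S3 client to be passed in, so the snippet runs without boto3 as long as only the in-memory adapter is used.

```python
from typing import Dict, Protocol


class BlobStore(Protocol):
    """Port: the only storage operations the application depends on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Adapter for tests or local runs."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class S3BlobStore:
    """Adapter backed by Amazon S3; expects a boto3 S3 client (not created here)."""

    def __init__(self, client, bucket: str) -> None:
        self._client = client
        self._bucket = bucket

    def put(self, key: str, data: bytes) -> None:
        self._client.put_object(Bucket=self._bucket, Key=key, Body=data)

    def get(self, key: str) -> bytes:
        return self._client.get_object(Bucket=self._bucket, Key=key)["Body"].read()


class ReportService:
    """Business logic that knows nothing about AWS, only about BlobStore."""

    def __init__(self, store: BlobStore) -> None:
        self._store = store

    def save_report(self, name: str, content: str) -> str:
        key = f"reports/{name}.txt"
        self._store.put(key, content.encode("utf-8"))
        return key

    def load_report(self, key: str) -> str:
        return self._store.get(key).decode("utf-8")


service = ReportService(InMemoryBlobStore())
key = service.save_report("daily", "all tests passed")
print(key, service.load_report(key))
```

With this layout, moving to another provider would mean writing one new adapter rather than touching ReportService.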

Although preparing for the certification exam took quite a long time, this post was meant to be a summary. That’s why I left out important services such as SES, VPC, CloudTrail, CloudWatch, Redshift, Parameter Store, and KMS. These services are also part of the exam preparation, and they sparked my curiosity to study them further soon. If you, dear reader, would like more details about a feature, or certification tips or suggestions, please let me know in the comments section.